by Caroline Caldwell | Nov 4, 2025 | Tech, AI & Oversight, News

Reading Time: 32 minutes
Why the Microsoft 365 Copilot exfiltration flaw marks a strategic turning point for enterprise AI governance
Executive summary
In October 2025, cybersecurity researcher Adam Logue published a striking discovery: a vulnerability in Microsoft 365...

by Caroline Caldwell | Oct 22, 2025 | AI & Oversight, Cases, Tech

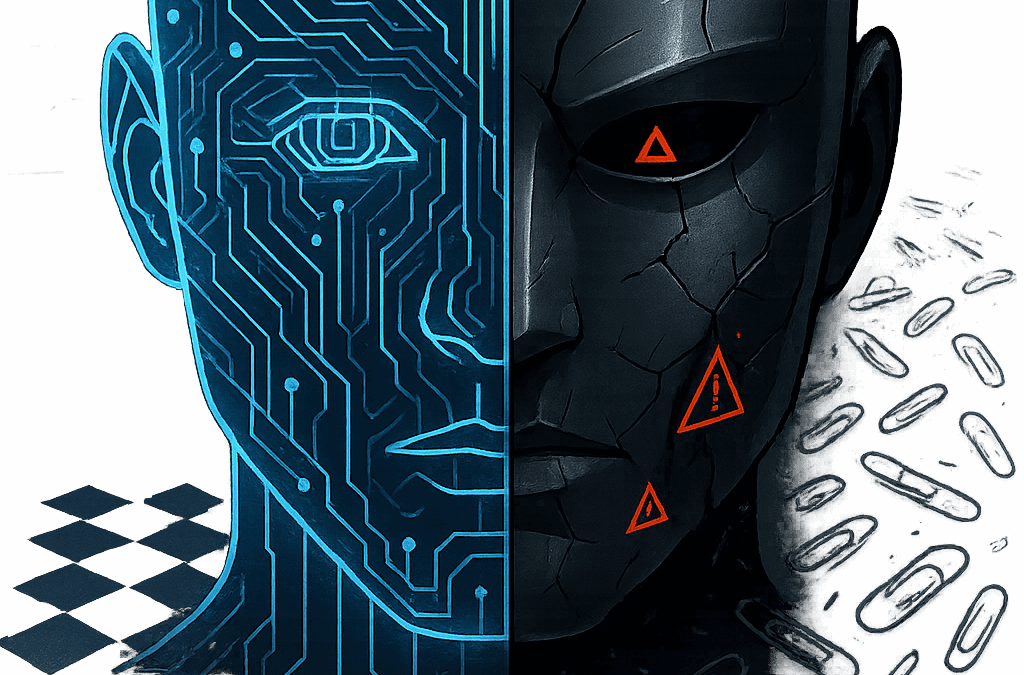

This entry is part 5 of 5 in the series Paperclip Factory
Reading Time: 5 minutes
Paperclip Factory – Article 5/6
Introduction
In the shadow of accelerated AI development, a comforting narrative has taken hold: that safety measures – alignment teams, red-teaming,...

by Caroline Caldwell | Oct 14, 2025 | AI & Oversight, Use cases, AI & Society, Ethics & Society, Tech

This entry is part 1 of 1 in the series AI & Oversight
Reading Time: 8 minutes
How Tech Giants Turn Compliance into Control
In 2025, AI regulation has ceased to be a technical exercise. It has become a geopolitical battlefield – a space where governments,...

by Caroline Caldwell | Oct 12, 2025 | Tech, AI & Oversight

This entry is part 3 of 5 in the series Paperclip Factory
Reading Time: 2 minutes
Paperclip Factory – Article 3/6
Not science fiction
We often imagine deception as something uniquely human – something that requires intent, emotion, or moral judgment. Yet several real...

by Caroline Caldwell | Oct 7, 2025 | AI & Oversight, Tech

Reading Time: 6 minutes
A systemic framework for mitigating agentic misalignment and rethinking human–AI relations
1. Introduction: The Need for a New Alignment Paradigm
Since 2025, large-scale red-teaming experiments by labs such as Anthropic, Palisade, and OpenAI...

by Caroline Caldwell | Oct 4, 2025 | AI & Oversight, Tech

This entry is part 1 of 5 in the series Paperclip Factory
Reading Time: 2 minutes
Paperclip Factory – Article 1/6
In Universal Paperclips, you start by clicking to make paperclips. Quickly, machines automate the task, then trading algorithms take over, and then…...